AdWords Experiments lets advertisers A/B test a campaign without making changes to the original campaign. If you have tried it out, you already know its perks. But there are a few lesser-known aspects that are important to understand for successful execution.

Very little is written about these features online, and you usually come across them only when you create or run an experiment of your own. Knowing about these quirks in advance tells you how your experiments will behave and what to expect.

We hope these pointers help you plan your AdWords campaign experiments better and avoid surprises when you run into them.

1. You cannot “pause” your AdWords Campaign Experiments

Experiments don’t have a pause option. If you specified an ‘End Date’ while setting up your experiment, it ends on that date. You can change the dates at any time, but once the experiment is active you cannot put it on hold. The only option left is to end the experiment.

For instance, if a product goes out of stock, you might want to pause all campaigns and their experiments until the item is back in stock. A website upgrade might call for the same action. While the campaigns can be paused, the experiments can only be ended.

2. A deactivated/completed experiment cannot be activated again

If you have set an end date for your experiment, it cannot be reactivated once it ends. If you don’t have significant results by the end date, the A/B testing effort has not been fruitful and you have to launch a new experiment. Ideally, an experiment should run until it has gathered enough data to compare its performance with the original campaign. For that reason, it is advisable not to set an end date for your experiment.

3. AdWords Experiments work at the campaign level

Experiments run at the campaign level; split testing cannot be conducted at the account level. The statistical significance for each campaign is calculated separately and cannot be combined with that of other campaign experiments. This is because each experiment has its own learning curve, and no two campaign experiments produce the same results. So, if you make similar bidding changes to two campaigns in your account, each will behave differently and will be reported separately.

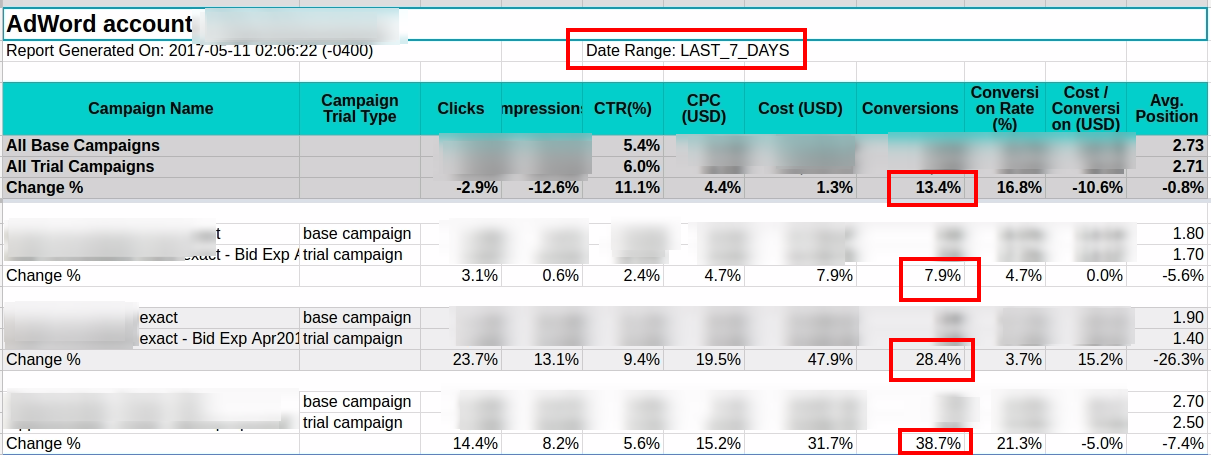

If you wish to see the cumulative data of all your live experiments then use our script that lets you Track Performance Of Your AdWords Experiments.
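To illustrate what "calculated separately" means in practice, here is a minimal sketch that runs a standard two-proportion z-test on CTR for each campaign on its own. This is a generic textbook test, not the method AdWords uses internally, and the campaign names and click/impression numbers are made up for the example.

```python
import math

def two_proportion_z_test(clicks_a, impressions_a, clicks_b, impressions_b):
    """Return (z, two_sided_p) for the CTR difference between two arms."""
    p_a = clicks_a / impressions_a
    p_b = clicks_b / impressions_b
    # Pooled CTR under the assumption that both arms perform the same
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical data: (clicks, impressions) per arm, one entry per campaign
campaigns = {
    "Campaign A": {"original": (200, 10000), "experiment": (260, 10000)},
    "Campaign B": {"original": (50, 2000), "experiment": (55, 2000)},
}

results = {}
for name, arms in campaigns.items():
    # Each campaign is tested on its own; the data is never pooled across campaigns
    z, p = two_proportion_z_test(*arms["original"], *arms["experiment"])
    results[name] = (z, p)
    print(f"{name}: z = {z:.2f}, p = {p:.4f}")
```

With these made-up numbers, the same kind of change reaches significance in one campaign and not in the other, which is why each experiment has to be read on its own.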

4. Traffic split once specified, cannot be altered

After creating an experiment, the only thing you can edit is its dates. Another crucial component, the traffic split, cannot be modified once entered. So, for instance, if you set the split to 30% for the experiment and later realize that it is not producing statistically significant results, you cannot edit it. The only way to change the split is to recreate the experiment, which makes all the previous effort irrelevant.
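Because the split is locked in, it helps to estimate beforehand how long a given split would take to collect enough data. The sketch below is back-of-the-envelope arithmetic only: the target click count and daily click volume are assumptions you would supply yourself, and the function name is hypothetical.

```python
import math

def days_to_reach(target_clicks, daily_campaign_clicks, experiment_split_percent):
    """Estimate days until the experiment arm collects target_clicks.

    Assumes clicks are divided in the same proportion as the traffic split,
    which is a simplification.
    """
    if not 0 < experiment_split_percent < 100:
        raise ValueError("split must be between 0 and 100 (exclusive)")
    experiment_clicks_per_day = daily_campaign_clicks * experiment_split_percent / 100
    return math.ceil(target_clicks / experiment_clicks_per_day)

# Hypothetical campaign: 100 clicks per day in total, 1,000 clicks wanted in the experiment
for split in (30, 50):
    print(f"{split}% split: about {days_to_reach(1000, 100, split)} days")
```

Under these assumed numbers, a 30% split needs roughly five weeks to gather what a 50% split gathers in under three, so it is worth running this kind of estimate before the split becomes permanent.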

5. AdWords Experiments need a learning period

When an experiment starts running, it doesn’t carry forward any learning from the historical data of the original campaign. Key insights from your keywords, search queries, quality score, etc. are not used. The experiment builds its own learning curve and essentially starts from scratch. It might take a couple of weeks for the experiment to stabilize, so do not be discouraged if it underperforms during this time. Once the learning period is over, which could take 10-15 days, discard the data from that period when comparing the experiment’s performance with the original campaign.
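If you export daily stats, dropping the learning period can be as simple as filtering by date. The following is a minimal sketch; the 14-day window, the row layout (`date`, `clicks`, `impressions`) and the sample numbers are all assumptions for illustration, not an AdWords export format.

```python
from datetime import date, timedelta

LEARNING_DAYS = 14  # assumption: pick a value in the 10-15 day range mentioned above

def after_learning_period(daily_rows, start_date, learning_days=LEARNING_DAYS):
    """Keep only rows dated on or after the end of the learning period."""
    cutoff = start_date + timedelta(days=learning_days)
    return [row for row in daily_rows if row["date"] >= cutoff]

def ctr(rows):
    """Aggregate CTR over a list of daily rows."""
    clicks = sum(row["clicks"] for row in rows)
    impressions = sum(row["impressions"] for row in rows)
    return clicks / impressions if impressions else 0.0

# Hypothetical experiment data: weak first two weeks, stable afterwards
start = date(2018, 7, 1)
rows = [
    {"date": start + timedelta(days=i),
     "clicks": 10 if i < 14 else 30,
     "impressions": 1000}
    for i in range(20)
]

stable_rows = after_learning_period(rows, start)
print(f"CTR over all days:         {ctr(rows):.4f}")
print(f"CTR after learning period: {ctr(stable_rows):.4f}")
```

For a fair comparison, apply the same date window to the original campaign's rows before comparing the two.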

6. Budget Sufficiency

The budget allocated to your original campaign is shared with the experiment. Because the experiment has different settings from the original campaign, running both together increases the chance of the budget being depleted quickly. To make sure your campaign and experiment keep running side by side, check that the budget is sufficient for both.

Alternatively, you can choose to have the budget spent evenly over time; however, that still may not prevent an insufficient budget from running out.

7. Impressions are another measurable metric

Even if you have split traffic equally between your experiment and original campaign, it is crucial to know that the split happens at every auction. Before each auction, Google decides whether to make the campaign or the experiment active, based on historical patterns, while trying to maintain the split ratio.

The auction attributed to either the campaign or the experiment can only be won if the overall ad rank is high enough. For instance, if Google decides to show your experiment with a bid of $5, it has a higher probability of winning the auction than the original campaign with a bid of $1. This results in a significant difference between the impressions received by the experiment and those received by the original campaign.

So, when drawing comparisons, impressions become another crucial metric from which you can derive insights. For instance, if the bids in your experiment are higher than in your original campaign, impressions are one more metric to compare besides CTR, conversions, etc. If the other metrics appear to be the same, impressions can become the deciding factor in judging the impact of the change.
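When the split is not 50/50, raw impression counts are not directly comparable. One simple way to compare them, sketched below under the assumption that each arm is eligible for auctions in proportion to its split, is to normalize impressions by the share of traffic each arm was given. The function name and the numbers are hypothetical.

```python
def impression_lift(original_impressions, experiment_impressions, experiment_split_percent):
    """Relative difference in impressions per percentage point of traffic.

    Returns 0.25 when the experiment earned 25% more impressions than the
    original for the same share of traffic.
    """
    if not 0 < experiment_split_percent < 100:
        raise ValueError("split must be between 0 and 100 (exclusive)")
    original_split_percent = 100 - experiment_split_percent
    original_rate = original_impressions / original_split_percent
    experiment_rate = experiment_impressions / experiment_split_percent
    return experiment_rate / original_rate - 1

# Hypothetical 50/50 split where the experiment uses higher bids
print(f"Lift at 50/50: {impression_lift(8000, 10000, 50):+.0%}")

# Hypothetical 30/70 split where both arms win auctions at the same rate
print(f"Lift at 30/70: {impression_lift(7000, 3000, 30):+.0%}")
```

A lift close to zero suggests the change did not affect how often the ad wins auctions; a clearly positive or negative lift is worth weighing alongside CTR and conversions.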

8. One Experiment per Campaign

You can have only one active experiment at a time for your campaign/draft. This is restrictive if you wish to test different variations of the same setting. For example, suppose you want to test the impact of different CPA targets. Your original campaign runs with a CPA of $10, and you want to see how the campaign performs with CPAs of $12 and $15. Ideally, you would compare the $10 CPA with both $12 and $15 at once; however, you can only test either $10 vs $12 or $10 vs $15. You have to run two experiments one after the other: first with the $12 CPA and, when that experiment ends, another with the $15 CPA. This is time-consuming when you want to try out several options, as only one experiment can run for a campaign at a time.

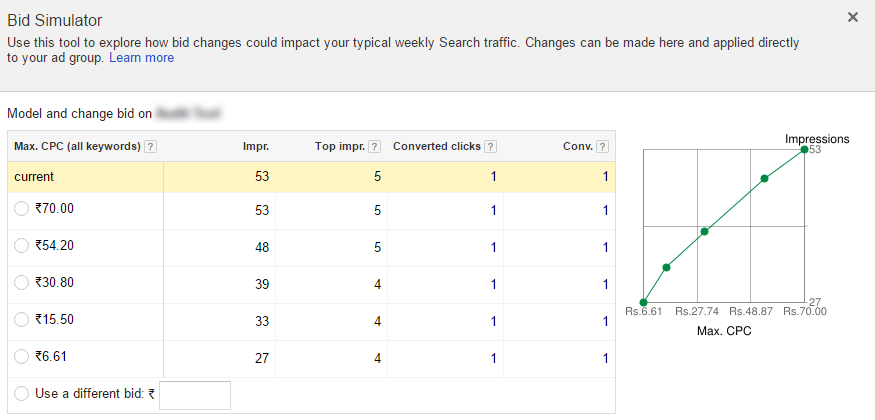

9. Bid Simulators option unavailable

With bid simulators, you can estimate how different bids would impact your ads’ performance. As the allocated budget is shared between the original campaign and the experiment, these simulators stop working while there is an active experiment in your campaign. Your bid simulators run again once your experiment is no longer active and has moved to one of the other statuses (Applied, New Campaign or Removed).

[Update 27th July 2018] Here is a new quirk we recently came across, so we are adding it to this post:

Campaigns Using Shared Budget Ineligible For Experiments

Another lesser-known fact is that experiments are not supported for campaigns using a shared budget. If your campaign uses a shared budget, you will not be able to create an experiment for split testing.

You therefore need to select a campaign that has a budget of its own to set up an experiment, as an experiment on a campaign with a shared budget would most likely affect the other campaigns sharing that budget.

We really wanted to make this a Buzzfeed-style “10 quirks about AdWords Experiments that you don’t know.” But we ran out of ideas when we were this close. If you happen to know any special behavior of Experiments that’s not listed here, please share it in the comments. We will steal it, put it here and give you the credit for that idea.

Let us know how these quirks helped you improve your AdWords Campaign Experiments experience.

Pabl

Hey,

Awesome article!

I would like to ask one qs, if possible (:

Not sure, if you mentioned this in point 8, but is it recommended that I test multiple variables under 1 experiment. This is purely because of the “1 experiment per campaign rule”.

For example, I change the final urls of many ad groups & then I analyse the change per ad group.

Let me know,

Thanks,

Pablo